Alongside the AI Action Summit, the French Ministry of Defence organised Military Talks dedicated to AI in the defence sector on February 10 at 2:00 pm at the École Militaire.

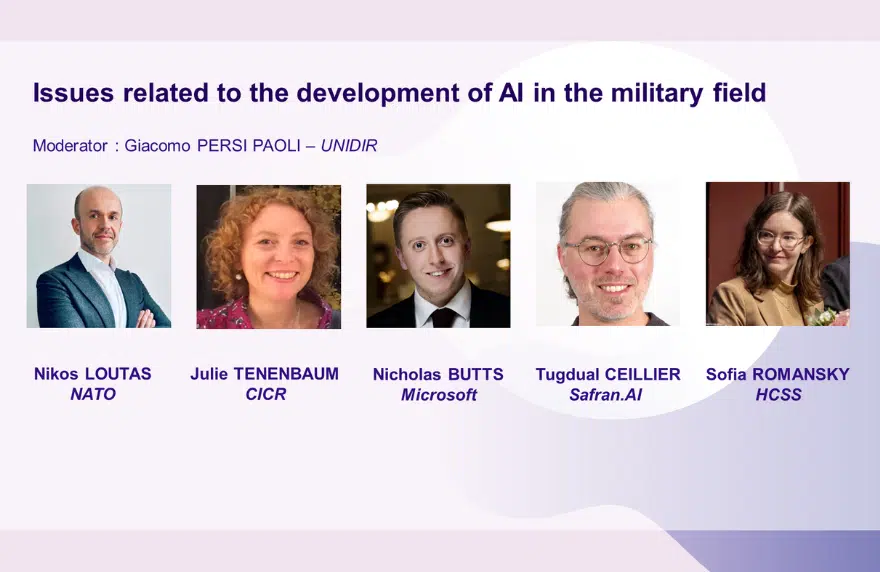

HCSS Strategic Analyst Sofia Romansky was invited to speak on the panel "Issues related to the development of AI in the military field", moderated by Giacomo Persi Paoli, one of our GC REAIM Experts.

Looking back at the event, Sofia stated: "The AI Action Summit in Paris has drawn significant attention, both for the state representatives in attendance and for several key outcome documents. Of particular note for conversations around the governance of artificial intelligence, the AI Action Summit produced a Statement on Inclusive and Sustainable Artificial Intelligence for People and the Planet and a Declaration on Maintaining Human Control in AI Enabled Weapon Systems. Sixty states signed the Statement, notably including China and India but not the United States or the United Kingdom. The Declaration received less widespread support, being co-signed by 26 primarily European states."

"Although both documents set out priorities and commitments, they do not establish concrete and immediate governance measures, which are lacking in the current global landscape. Nonetheless, the documents do signal the continued commitment of several key players to responsible AI frameworks. Additionally, while the Summit itself focused primarily on AI in the civilian domain, the event broke with the status quo by directly acknowledging and addressing the uses of AI technologies in the military domain, not only through the Declaration but also through the Military Talks side event, which brought together military practitioners, industry, and civil society," Romansky continued.

"What the AI Action Summit ultimately demonstrates is that geopolitical dynamics and the corresponding calculations of state actors remain powerful driving forces behind the direction that AI governance and regulation are taking. Still, despite clear tensions and a purported AI arms race, a common language for speaking about AI risks and harms, as well as opportunities, is emerging. This shows that opinions have not entirely diverged and that there is still space for further deliberation."

In conclusion, Sofia noted: "Drawing on my experience in the GC REAIM (Global Commission on Responsible Artificial Intelligence in the Military Domain) as well as my research (with several publications on the horizon), I emphasized three main takeaways, both in the panel and in the many conversations that followed:

1️⃣ In deliberations on international and national governance, it is necessary to remain aware of the geopolitical factors that sway decision-makers. AI adds to uncertainty in the international arena, fuelling perceived competition and unpredictability.

2️⃣ One achievement of international deliberations has been the identification of core principles that would ideally guide governance: accordance with international law, bias and harm mitigation, and so on. Yet the next step must be operationalization if initiatives such as REAIM are to continue to have an impact.

3️⃣ Operationalization of the identified principles could benefit greatly from anchoring discussions in the concept of 'responsibility', answering questions such as: Who is responsible? In what contexts? To what effect? These questions will help us soberly assess what we actually wish to achieve with both AI technologies and the corresponding guidelines."

Read more: AI Action Summit – Military Talks | Ministère des Armées